(2024-09-30) ZviM AI And The Technological Richter Scale

Zvi Mowshowitz: AI (AGI) and the Technological Richter Scale. The Technological Richter scale is introduced about 80% of the way through Nate Silver’s new book On the Edge.

Silver: The Richter scale was created by the physicist Charles Richter in 1935 to quantify the amount of energy released by earthquakes.

It has two key features that I’ll borrow for my Technological Richter Scale (TRS). First, it is logarithmic. A magnitude 7 earthquake is actually ten times more powerful than a mag 6. Second, the frequency of earthquakes is inversely related to their Richter magnitude—so 6s occur about ten times more often than 7s. Technological innovations can also produce seismic disruptions. (technological revolution, huge invention)
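As a rough illustration of the two borrowed properties, here is a minimal Python sketch. It assumes exactly what the quoted passage states: each whole step up the scale is ten times larger in impact and about ten times rarer. The function names `relative_impact` and `relative_frequency` are hypothetical, introduced only for this example.

```python
def relative_impact(level_a: float, level_b: float) -> float:
    """How many times larger a level_a event is than a level_b event.

    Assumes a base-10 logarithmic scale, as in the quoted passage.
    """
    return 10.0 ** (level_a - level_b)


def relative_frequency(level_a: float, level_b: float) -> float:
    """How many times more often level_a events occur than level_b events.

    Assumes frequency falls tenfold per whole step, as in the quoted passage.
    """
    return 10.0 ** (level_b - level_a)


if __name__ == "__main__":
    print(relative_impact(7, 6))     # 10.0: a 7 is ten times a 6
    print(relative_frequency(6, 7))  # 10.0: 6s are ten times as common as 7s
    print(relative_impact(8, 6))     # 100.0: two steps compound
```

Under these assumptions a two-step gap is a factor of one hundred, which is why the difference between "a high 7" and "an 8" matters so much in the discussion that follows.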

Let’s proceed quickly through the lower readings of the Technological Richter Scale.

1. Like a half-formulated thought in the shower.

2. Is an idea you actuate, but never disseminate: a slightly better method to brine a chicken that only you and your family know about.

3. Begins to show up in the official record somewhere, an idea you patent or make a prototype of.

4. An invention successful enough that somebody pays for it; you sell it commercially or someone buys the IP.

5. A commercially successful invention that is important in its category, say, Cool Ranch Doritos, or the leading brand of windshield wipers.

6. An invention can have a broader societal impact, causing a disruption within its field and some ripple effects beyond it. A TRS 6 will be on the short list for technology of the year. At the low end of the 6s (a TRS 6.0) are clever and cute inventions like Post-it notes that provide some mundane utility. Toward the high end (a 6.8 or 6.9) might be something like the VCR, which disrupted home entertainment and had knock-on effects on the movie industry. The impact escalates quickly from there.

7. One of the leading inventions of the decade and has a measurable impact on people’s everyday lives. Something like credit cards would be toward the lower end of the 7s, and social media a high 7.

8. A truly seismic invention, a candidate for technology of the century, triggering broadly disruptive effects throughout society. Canonical examples include automobiles, electricity, and the internet.

9. By the time we get to TRS 9, we’re talking about the most important inventions of all time, things that inarguably and unalterably changed the course of human history. You can count these on one or two hands. There’s fire, the wheel, agriculture, the printing press. Although they’re something of an odd case, I’d argue that nuclear weapons belong here also. True, their impact on daily life isn’t necessarily obvious if you’re living in a superpower protected by its nuclear umbrella (someone in Ukraine might feel differently). But if we’re thinking in expected-value terms, they’re the first invention that had the potential to destroy humanity.

10. Finally, a 10 is a technology that defines a new epoch, one that alters not only the fate of humanity but that of the planet. For roughly the past twelve thousand years, we have been in the Holocene, the geological epoch defined not by the origin of Homo sapiens per se but by humans becoming the dominant species and beginning to alter the shape of the Earth with our technologies. AI wresting control of this dominant position from humans would qualify as a 10, as would other forms of a “technological singularity,” a term popularized by the computer scientist Ray Kurzweil.

One could quibble with some of these examples.

Later he puts ‘the blockchain’ in the 7s, and I’m going to have to stop him right there. No.

I’m sending blockchain down to at best a low 6.

Microwaves then correctly got crushed when put up against real 7s.

The overall point is also clear.

The Big Disagreement About Future Generative AI (GenAI)

What is the range of plausible scores on this scale for generative AI?

The (unoriginal) term that I have used a few times, for the skeptic case, the AI-fizzle world, is that AI could prove to be ‘only internet big.’ In that scenario, GPT-5-level models are about as good as it gets.

I think that even if we see no major improvements from there, an 8.0 is already baked in. Counting the improvements I am very confident we can get, that rises to about an 8.5.

I think that 5-level models, given time to have their costs reduced and to be properly utilized, will inevitably be at least internet big, but only 45% of respondents agreed. I also think that 5-level models are inevitable - even if things are going to peter out, we should still have that much juice left in the tank.

Whereas, and I think this is mind-numbingly obvious, any substantial advances beyond that get us at least into the 9s, which probably gets us ASI (or an AGI capable of enabling the building of an ASI) and therefore is at least a 10.0.

Just Think of the Potential

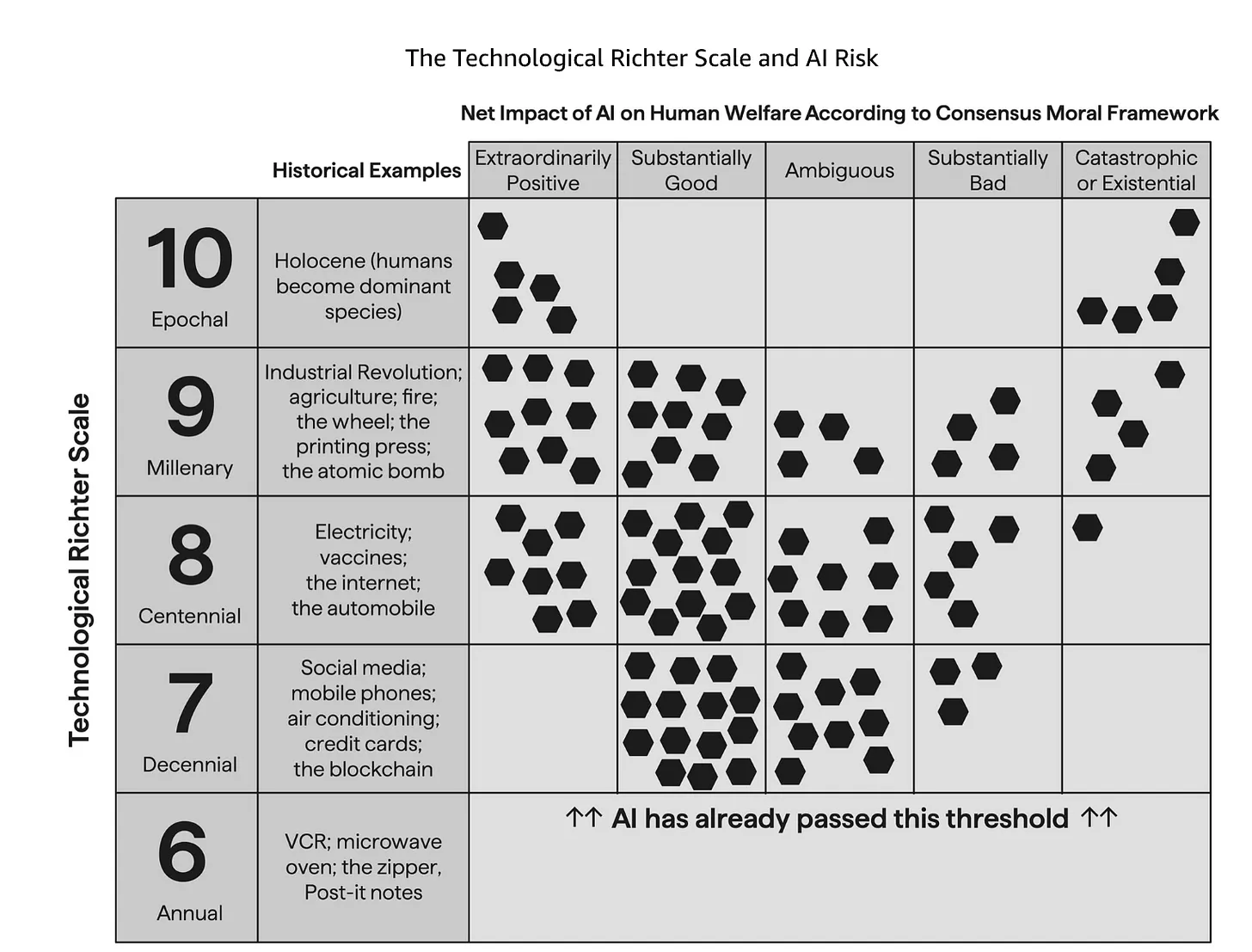

Nate Silver offers this chart:

No, it hasn’t been as impactful as those things at 7 yet, but if civilization continues relatively normally that is inevitable.

What if it does go to 10-level, fully transformational AI? Nate nails the important point, which is that the result is either very, very good or it is very, very bad. The chart above puts the odds at 50/50. I think the odds are definitely against us here. (p(doom))

What are some of the most common arguments against transformational AI?

This is a sincere attempt to list as many of them as possible, and to distill their actual core arguments.

1. We are on an S-curve of capabilities, and near the top, and that will be that.

2. A sufficiently intelligent computer would take too much [compute, power, data]. (continues with list of 34!)

I (Bill) think 1-2 are my picks.

A few of these are actual arguments that give one pause. It is not so implausible that we are near the top of an S-curve, that in some sense we don’t have the techniques and training data to get much more intelligence out of the AIs than we already have. Diminishing returns could set in, the scaling laws could break, and AI would get more expensive a lot faster than it makes everything easier, and progress stalls. The labs say there are no signs of it, but that proves nothing. We will, as we always do, learn a lot when we see the first 5-level models, or when we fail to see them for sufficiently long.

You say the true intelligence requires embodiment? I mean I don’t see why you think that, but if true then there is an obvious fix. The true intelligence won’t matter because it won’t have a body? Um, you can get humans to do things by offering them money.

Brief Notes on Arguments Transformational AI Will Turn Out Fine

I tried several times to write out taxonomies of the arguments that transformational AI will turn out fine. What I discovered was that going into details here rapidly took this post beyond scope, and getting it right is important but difficult.

1. Arguments that human extinction is fine. (e.g. either AIs will have value inherently, AIs will carry our information and values, something else is The Good and can be maximized without us, or humanity is net negative, and so on.) (list of 13)

I list ‘extinction is fine’ first because it is a values disagreement, and because it is important to realize a lot of people actually take such positions.

The rest are in a combination of logical order and a roughly descending order of plausibility and of quality of the best of each class of arguments.

The argument of whether we should proceed, and in what way, is of course vastly more complex than this and involves lots of factors on all sides.

A remarkably large percentage of arguments for things being fine are either point 1 (human extinction is fine), point 11 (this particular bad end is implausible so things will be good) or points 12 and 13.


Made with flux.garden
